Long ago, I toured West Germany, visiting some family friends near Hanover. They suggested I go see Duderstadt, a picturesque town nearby (see picture of it below).

My wife, Nancy, and I drove into Duderstadt and walked around. It was indeed gorgeous, but very strange. Not a person was in sight. Every shop was closed. In the center of the town was a beautiful church. We reasoned that churches are always open. We walked to the church door, I stretched out my hand to open it, but inches away the door burst open. An entire wedding party streamed out into the street. The church was packed to the rafters with happy people, now following the bride and groom out of the church. Mystery solved.

If social scientists had come to Duderstadt when we did but failed to see the wedding, they might have drawn all sorts of false conclusions. An economist might see the empty shops and conclude that the economy of rural Germany is doomed, due to low productivity. A demographer might agree and blame this on the obviously declining workforce. But looking just the thickness of a church door beneath the surface, all could immediately understand what was happening.

My point here is a simple one. I am a quant. I believe in numbers and rigorous research designs. But at the same time, I also want to understand what is really going on, and the main numbers rarely tell the whole story.

I was thinking about this when I read the rather remarkable study by Carolyn Heinrich and her colleagues (2010), cited in my two previous blogs. Like many other researchers, she and her colleagues found near-zero impacts for Supplemental Educational Services. At the time this study took place, this was a surprise. How could all that additional instructional time after school not make a meaningful difference?

But instead of just presenting the overall (bad) findings, she poked around town, so to speak, to find out what was going on.

What she found was appalling, but also perfectly logical. Most eligible middle and high school students in Milwaukee who were offered after-school programs either failed to sign up, or if they did sign up, did not attend even a single day, or if they did attend a single day, attended irregularly thereafter. And why did they not sign up or attend? Most programs offered attractive incentives, such as iPods, very popular at the time, so about half of the eligible students did sign up, at least. But after the first day, when they got their incentives, students faced drudgery. Heinrich et al. cite evidence that most instruction was either teachers teaching immobile students, or students doing unsupervised worksheets. Heinrich et al.’s technical report had a sentence (dropped in the published report), which I quoted previously, but will quote again here: “One might also speculate that parents and students are, in fact, choosing rationally in not registering for or attending SES.”

A study of summer school by Borman & Dowling (2006) made a similar observation. K-1 students in Baltimore were randomly assigned to have an opportunity to attend three years of summer school. The summer school sessions included 7 weeks of 6-hour-a-day activities, including 2½ hours of reading and writing instruction, plus sports, art, and other enrichment activities. Most eligible students (79%) signed up and attended in the first summer, but fewer did so in the second summer (69%) and even fewer in the third summer (42%). The analyses focused on the students who were eligible for the first and second summers, and found no impact on reading achievement. There was a positive effect for the students who did show up and attended for two summers.

Many studies of summer school, after school, and SES programs overall (including both) have just reported the disappointing outcomes without exploring why they occurred. Such reports are important, if well done, but they offer little understanding of why. Could after school or summer school programs work better if we took into account the evidence on why they usually fail? Perhaps. For example, in my previous blog, I suggested that extended-time programs might do better if they provided one-to-one, or small-group tutoring. However, there is only suggestive evidence that this might be true, and there are good reasons that it might not be, because of the same attendance and motivation problems that may doom any program, no matter how good, when struggling students go to school during times when their friends are outside playing.

Econometric production function models predicting that more instruction leads to more learning are useless unless we take into account what students are actually being provided in extended-time programs and what their motivational state is likely to be. We have to look a bit below the surface to explain why disappointing outcomes are so often achieved, so we can avoid mistakes and repeat successes, rather than making the same mistakes over and over again.

Correction

My recent blog, “Avoiding the Errors of Supplemental Educational Services,” started with a summary of the progress of the Learning Recovery Act. It was brought to my attention that my summary was not correct. In fact, the Learning Recovery Act has been introduced in Congress, but is not part of the current reconciliation proposal moving through Congress and has not become law. The Congressional action cited in my last blog was referring to a non-binding budget resolution, the recent passage of which facilitated the creation of the $1.9 trillion reconciliation bill that is currently moving through Congress. Finally, while there is expected to be some amount of funding within that current reconciliation bill to address the issues discussed within my blog, reconciliation rules will prevent the Learning Recovery Act from being included in the current legislation as introduced. I apologize for this error.

References

Borman, G. D., & Dowling, N. M. (2006). Longitudinal achievement effects of multiyear summer school: Evidence from the Teach Baltimore randomized field trial. Educational Evaluation and Policy Analysis, 28(1), 25–48. https://doi.org/10.3102/01623737028001025

Heinrich, C. J., Meyer, R. H., & Whitten, G. W. (2010). Supplemental Education Services under No Child Left Behind: Who signs up and what do they gain? Educational Evaluation and Policy Analysis, 32, 273–298.

Photo credit: Amrhingar, CC BY-SA 3.0, via Wikimedia Commons

This blog was developed with support from Arnold Ventures. The views expressed here do not necessarily reflect those of Arnold Ventures.

Note: If you would like to subscribe to Robert Slavin’s weekly blogs, just send your email address to thebee@bestevidence.org.

Had the farmer been hoping to sell his whole orchard, and had he taken potential buyers to see this one tree, and offered potential buyers picked apples from this particular branch, then that would be gross cherry-picking. However, he knew (and the Stark Seed Company knew) all about grafting, so instead of using his exceptional branch to fool anyone (note that I am resisting the urge to mention “graft and corruption”), the farmer and Stark could replicate that amazing branch. The key here is the word “replicate.” If it were impossible to replicate the amazing branch, the farmer would have had a local curiosity at most, or perhaps just a delicious occasional snack. But with replication, this one branch transformed the eating apple for a century.

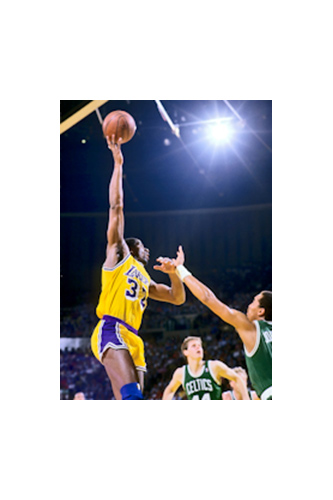

In educational research evaluating replicable programs and practices, our objectives are quite different. Sports reporting builds up heroes, because that’s what readers want to hear about. But in educational research, we want fair, complete, and meaningful evidence documenting the effectiveness of practical means of improving achievement or other outcomes. The problem is that academic publications in education also distort understanding of outcomes of educational interventions, because studies with significant positive effects (analogous to Magic’s best days) are far more likely to be published than are studies with non-significant differences (like Magic’s worst days). Unlike the situation in sports, these distortions are harmful, usually overstating the impact of programs and practices. Then when educators implement interventions and fail to get the results reported in the journals, this undermines faith in the entire research process.
