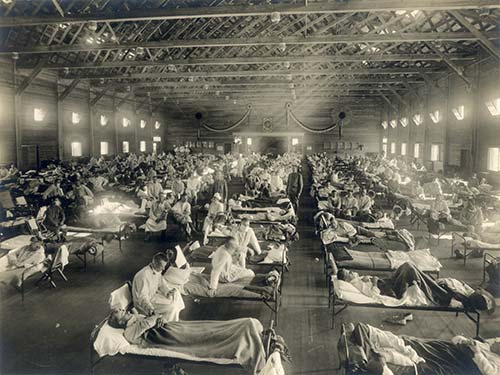

In an article in the May 23 Washington Post, Dr. John Barry, a professor of Public Health and Tropical Medicine at Tulane, wrote about lessons from the 1918 influenza epidemic. Dr. Barry is the author of a book about that long-ago precursor to the epidemic we face today. I found the article chilling, in light of what is happening right now in the Covid-19 pandemic.

In particular, he wrote about a study of Army training camps in 1918. Army leaders prescribed strict isolation and quarantine measures, and most camps followed this guidance. However, some did not. Most camps that did follow the guidance did so rigorously for a few weeks, but then gradually loosened up. The study compared the camps that never did anything to the camps that followed the guidelines for a while. There were no differences in the rates of sickness or death. However, a third set of camps continued to follow the guidance for a much longer time. These camps saw greatly reduced rates of sickness and death.

Dr. Barry also gave an example from the SARS epidemic in the early 2000s. President George W. Bush wanted to honor the one hospital in the world with the lowest rate of SARS infection among staff. A study of that hospital found that they were doing exactly what all hospitals were doing, making sure that staff maintained sterile procedures. The difference was that in this hospital, the hospital administration made sure that these rules were being rigorously followed.

This reminds me of a story by Atul Gawande about the most successful hospital in the world for treating cystic fibrosis. Researchers studied this hospital, an ordinary, non-research hospital in a Minneapolis suburb. The physician in charge of cystic fibrosis was found to be using the very same procedures and equipment that every other hospital used. The difference was that he frequently called all of his patients to make sure they were using the equipment and procedures properly. His patients had markedly higher survival rates than did patients in similar hospitals doing exactly the same (medical) things with less attention to fidelity.

Now consider what is happening in the U.S. in our current pandemic. Given our late start, we have done a pretty good job reducing rates of disease and death, compared to what might have been. However, all fifty states are now opening up, to one degree or another. The basic message: “We have been careful long enough. Now let’s get sloppy.”

Epidemiologists are watching all of this with horror. They know full well what is coming. Leana Wen, Baltimore’s former Health Commissioner, explained the consequences of the choices we are making in a deeply disturbing article in the May 13 Post.

The entire story of what has happened in the Covid-19 crisis, and what is likely to happen now, resonates strongly with problems we experience in educational reform. Our field is full of really good ideas for improving educational outcomes. However, we have relatively few examples of programs that have been successful even in one-year evaluations, much less over extended time periods at large scale. The problem is not usually that the ideas turn out not to be so good after all, but that they are rarely implemented with consistency or rigor. Or they are implemented well for a while, but get sloppy over time, or stop altogether. I am often asked how long innovators must stay connected with schools that are using their research-proven programs successfully. My answer is, “forever.” The connection need not be frequent with successful implementers, but someone who knows what the program is supposed to look like needs to check in from time to time to see how things are going, to cheer the school on in its efforts to maintain and constantly improve its implementation, and to help the school identify and solve any problems that have cropped up.

Another thing I am frequently asked is how I can base my argument for evidence-based education on the examples of medicine and other evidence-based fields. “Taking a pill is not like changing a school.” This is true. However, the examples of epidemiology, cystic fibrosis (before the recent cure was announced), dealing with obesity and drug abuse, and many other problems of medicine and public health, actually look quite a bit like the problems of education reform. In medicine, there is a robust interest in “implementation science,” focused on, among other things, getting people to take their medicine or follow a proven protocol (e.g., “eat more veggies”). There is growing interest in implementation science in education, too. Similar problems, similar solutions, in many cases.

Education, public health, and medicine have a lot to learn from each other. In each case, we are trying to make important differences in whole populations. It is never easy, but in each of our fields, we are learning how to cost-effectively improve health and education outcomes at scale. In the current pandemic, I hope science will prevail both in reducing the impact of the disease and in using proven practices, with consistency and rigor, to help schools repair the educational damage children have suffered.

References

Barry, J.M. (2020, May 23). How to avoid a second wave of infections. Washington Post.

Wen, L.S. (2020, May 13). We are retreating to a new strategy on covid-19. Let’s call it what it is. Washington Post.

This blog was developed with support from Arnold Ventures. The views expressed here do not necessarily reflect those of Arnold Ventures.

Note: If you would like to subscribe to Robert Slavin’s weekly blogs, just send your email address to thebee@bestevidence.org.

Nightingale was not only a statistician, she was an innovator among statisticians. Her life’s goal was to improve medical care, public health, and nursing for all, but especially for people in poverty. In her time, landless people were pouring into large, filthy industrial cities. Death rates from unclean water and air, and unsafe working conditions, were appalling. Women suffered most, and deaths from childbirth in unsanitary hospitals were all too common. This was the sentimental Victorian age, and there were people who wanted to help. But how could they link particular conditions to particular outcomes? Opponents of investments in prevention and health care argued that the poor brought the problems on themselves, through alcoholism or slovenly behavior, or that these problems had always existed, or even that they were God’s will. The numbers of people and variables involved were enormous. How could these numbers be summarized in a way that would stand up to scrutiny, but also communicate the essence of the process leading from cause to effect?

Recently I gave a series of speeches in China, organized by the Chinese University of Hong Kong and Nanjing Normal University. I had many wonderful and informative experiences, but one evening stood out.
